Pose Estimation

Introduction

In recent years, pose estimation has become a core task in computer vision. It refers to estimating the position and orientation, or pose, of objects or people in an image or video. Advances in deep learning and the availability of large annotated datasets have made it practical for a range of applications, including robotics, augmented reality, human-computer interaction, and sports analysis. This essay explores the concept of pose estimation, its challenges, and its real-world applications.

Understanding Pose Estimation

Pose estimation involves determining the spatial position and orientation of an object or individual relative to a reference coordinate system. In computer vision, this is achieved by analyzing visual data, such as images or videos, using mathematical models and algorithms. The goal is to accurately estimate the pose parameters, which often include translation, rotation, and scale.
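
As a minimal sketch of what these pose parameters mean, the snippet below applies a rotation, a translation, and a scale to a few 2D points. The function name `apply_pose_2d` and the sample points are hypothetical and chosen only for illustration. Pose estimation is the inverse problem: recovering such parameters from the observed points.

```python
import math

def apply_pose_2d(points, angle_rad, translation, scale=1.0):
    """Apply a 2D similarity transform: scale and rotate, then translate."""
    cos_a, sin_a = math.cos(angle_rad), math.sin(angle_rad)
    tx, ty = translation
    transformed = []
    for x, y in points:
        # Rotate the point about the origin and scale it.
        xr = scale * (cos_a * x - sin_a * y)
        yr = scale * (sin_a * x + cos_a * y)
        # Shift the result by the translation vector.
        transformed.append((xr + tx, yr + ty))
    return transformed

# Hypothetical model points expressed in the object's own coordinate frame.
model = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]

# Pose: rotate by 90 degrees, double the size, move to (2, 3).
observed = apply_pose_2d(model, math.pi / 2, (2.0, 3.0), scale=2.0)
```

Here the model origin lands on the translation vector, and the two unit axes end up rotated by a quarter turn and stretched to length 2.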

Types of Pose Estimation

2D Pose Estimation: 2D pose estimation estimates pose in the two-dimensional coordinates of an image or video frame. It involves identifying key points or landmarks on the object or individual, such as body joints, and estimating their pixel positions. This technique is widely used in applications such as human pose estimation for activity recognition, gesture recognition, and human-computer interaction.

3D Pose Estimation: 3D pose estimation takes the process a step further by estimating the pose parameters in three-dimensional space. It involves recovering the three-dimensional coordinates of the object or individual from the visual data. This technique finds applications in robotics, augmented reality, virtual reality, and autonomous navigation.
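
One way to see why recovering 3D coordinates from a single image is hard is to look at the forward direction. The sketch below assumes an ideal pinhole camera (no lens distortion); the focal length and principal point values are made up for illustration. Two different 3D points that lie on the same viewing ray project to the same pixel, so depth cannot be read off a single 2D observation without extra information.

```python
def project_points(points_3d, focal_length, principal_point):
    """Project 3D camera-frame points to pixels with an ideal pinhole model."""
    cx, cy = principal_point
    projected = []
    for x, y, z in points_3d:
        if z <= 0:
            raise ValueError("point must be in front of the camera (z > 0)")
        # Perspective division: image position depends on x/z and y/z only.
        u = focal_length * x / z + cx
        v = focal_length * y / z + cy
        projected.append((u, v))
    return projected

# Assumed intrinsics for illustration: 500 px focal length, 640x480 image.
FOCAL = 500.0
CENTER = (320.0, 240.0)

near = project_points([(1.0, 0.5, 2.0)], FOCAL, CENTER)
far = project_points([(2.0, 1.0, 4.0)], FOCAL, CENTER)  # same ray, twice as far
```

Both calls return the same pixel, which is why 3D methods typically rely on multiple views, depth sensors, or learned priors about object and body shape.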

Challenges in Pose Estimation

Pose estimation is a challenging task due to various factors, including occlusions, scale variations, viewpoint changes, and complex object shapes. Additionally, the accuracy of pose estimation heavily relies on the quality and quantity of training data, as well as the robustness of the underlying algorithms.

Occlusions: Occlusions occur when parts of the object or individual are hidden or overlapped by other objects or body parts. Handling occlusions is crucial in pose estimation, as missing or incorrectly estimated key points can lead to inaccurate pose estimation.
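
A common, simple safeguard is to treat low-confidence key points as missing rather than trusting their coordinates. The sketch below assumes each detection is an `(x, y, confidence)` triple, which is a typical but not universal output format; the names and the 0.5 threshold are illustrative choices.

```python
def visible_keypoints(keypoints, min_confidence=0.5):
    """Keep only key points whose confidence reaches the threshold."""
    return {
        name: (x, y)
        for name, (x, y, confidence) in keypoints.items()
        if confidence >= min_confidence
    }

# Hypothetical detections; the right wrist is hidden behind the torso.
detections = {
    "nose": (160.0, 90.0, 0.97),
    "left_wrist": (120.0, 310.0, 0.91),
    "right_wrist": (0.0, 0.0, 0.08),
}

visible = visible_keypoints(detections)
```

Downstream steps can then skip or interpolate the missing joints instead of computing with a wrong position.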

Scale Variations: Pose estimation algorithms must be able to handle scale variations, where the size of the object or individual changes. This is particularly important in applications such as sports analysis, where athletes can appear at different distances from the camera.
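
When comparing 2D poses, one simple way to reduce the effect of distance from the camera is to express key points relative to a body-sized reference length. The sketch below assumes `neck` and `mid_hip` key points are available and uses the distance between them as that reference; both the key point names and the choice of torso length are assumptions made for illustration.

```python
import math

def normalize_by_torso(keypoints):
    """Center key points on the hip and divide by the neck-to-hip distance."""
    neck = keypoints["neck"]
    hip = keypoints["mid_hip"]
    torso = math.dist(neck, hip)
    if torso == 0:
        raise ValueError("neck and mid_hip coincide; cannot normalize")
    return {
        name: ((x - hip[0]) / torso, (y - hip[1]) / torso)
        for name, (x, y) in keypoints.items()
    }

# The same pose seen close to the camera and from twice as far away.
close_pose = {"neck": (100.0, 100.0), "mid_hip": (100.0, 200.0), "left_wrist": (150.0, 150.0)}
far_pose = {"neck": (50.0, 50.0), "mid_hip": (50.0, 100.0), "left_wrist": (75.0, 75.0)}

close_norm = normalize_by_torso(close_pose)
far_norm = normalize_by_torso(far_pose)
```

After normalization the two poses have matching coordinates, so later comparisons depend on body configuration rather than apparent size.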

Viewpoint Changes: Estimating pose accurately despite viewpoint changes is a significant challenge. Object or individual appearances can vary drastically depending on the camera's viewpoint, making it difficult to establish correspondences between image features.

Complex Object Shapes: Pose estimation becomes more challenging when dealing with objects or individuals with complex shapes. The algorithms must be able to handle non-rigid deformations and accurately estimate the pose parameters.

Real-World Applications

Pose estimation has found numerous practical applications across various domains.

Robotics: In robotics, pose estimation is essential for tasks such as object manipulation, robot localization, and mapping. Accurate pose estimation allows robots to interact with their environment, navigate autonomously, and perform complex tasks.

Augmented Reality: Pose estimation is a fundamental component of augmented reality systems. It enables the alignment of virtual objects with the real-world scene, creating immersive and interactive experiences. Applications range from virtual try-on in e-commerce to gaming and virtual training simulations.

Human-Computer Interaction: Pose estimation plays a crucial role in human-computer interaction, enabling natural and intuitive interactions. It facilitates gesture recognition, body tracking, and hand pose estimation, allowing users to control devices and interfaces without physical contact.

Sports Analysis: In sports analysis, pose estimation is used to track athletes' movements, analyze techniques, and provide insights for performance improvement. It enables coaches and analysts to gain a deeper understanding of players' actions, leading to more effective training strategies.
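
A typical building block for this kind of technique analysis is the angle at a joint, computed from three detected key points. The following is a minimal sketch with made-up hip, knee, and ankle coordinates; it works on 2D image coordinates, so the angle it reports is the projected angle, not the true 3D joint angle.

```python
import math

def joint_angle_degrees(a, b, c):
    """Return the angle at point b formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("joint angle is undefined for coincident points")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.degrees(math.acos(cos_angle))

# Hypothetical key points for one leg in image coordinates.
hip, knee, ankle = (0.0, 0.0), (0.0, 1.0), (1.0, 1.0)
knee_angle = joint_angle_degrees(hip, knee, ankle)
```

Tracking such an angle frame by frame gives a simple signal for comparing a movement against a reference technique.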

Deep Learning in Pose Estimation

Deep learning has played a significant role in advancing the field of pose estimation. Convolutional Neural Networks (CNNs) learn rich feature representations from images, and Recurrent Neural Networks (RNNs) have been used to model motion across video frames, together improving the accuracy of pose estimation algorithms. Additionally, architectures like Hourglass Networks and PoseNet have been specifically designed for pose estimation tasks and have achieved strong results on them.
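
Many key point networks, stacked hourglass models among them, output one heatmap per joint, where each cell scores how likely the joint is at that location. The sketch below shows the simplest decoding step, taking the highest-scoring cell; the toy heatmap is invented for illustration, and real systems usually add sub-pixel refinement and rescaling to the input resolution.

```python
def decode_heatmap(heatmap):
    """Return (x, y, score) of the highest-scoring cell in a 2D heatmap."""
    best_score = float("-inf")
    best_x, best_y = 0, 0
    for row_index, row in enumerate(heatmap):
        for col_index, score in enumerate(row):
            if score > best_score:
                best_score = score
                best_x, best_y = col_index, row_index
    return best_x, best_y, best_score

# Toy 3x3 heatmap for a single joint (rows are y, columns are x).
toy_heatmap = [
    [0.0, 0.1, 0.0],
    [0.1, 0.2, 0.9],
    [0.0, 0.1, 0.3],
]

x, y, score = decode_heatmap(toy_heatmap)
```

The returned score can double as a confidence value for the detected joint.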

Dataset Challenges and Solutions

The availability of diverse and well-annotated datasets is crucial for training accurate pose estimation models. However, creating such datasets can be challenging and time-consuming. Collecting ground truth pose annotations for large-scale datasets requires manual effort and expertise. To address this, researchers have developed synthetic datasets using computer graphics techniques, which provide a large amount of labeled data for training. Transfer learning techniques have also been employed to alleviate the need for large amounts of labeled data by using pre-trained models on related tasks.

Real-Time Pose Estimation

Real-time pose estimation is a demanding requirement for many applications, such as robotics and augmented reality. Achieving real-time performance while maintaining high accuracy is a significant challenge due to the computational complexity of pose estimation algorithms. Researchers are constantly working on optimizing algorithms and leveraging hardware acceleration, such as Graphics Processing Units (GPUs) and specialized hardware like Field-Programmable Gate Arrays (FPGAs) and Tensor Processing Units (TPUs), to achieve fast and efficient pose estimation.
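
Whether a pipeline counts as real-time is usually checked by measuring throughput against a frame-rate target. The sketch below times a stand-in function over a batch of fake frames; `dummy_estimator` is a placeholder for a real model call, and the 30 frames-per-second target is an assumed budget, not a requirement stated by any particular application.

```python
import time

def measure_fps(estimate_pose, frames):
    """Run the estimator on every frame and return frames per second."""
    start = time.perf_counter()
    for frame in frames:
        estimate_pose(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed if elapsed > 0 else float("inf")

def dummy_estimator(frame):
    """Placeholder for a real pose model; just does a little arithmetic."""
    return sum(frame) / len(frame)

# Fifty fake frames, each a flat list of 1000 pixel values.
frames = [[0.0] * 1000 for _ in range(50)]

fps = measure_fps(dummy_estimator, frames)
meets_budget = fps >= 30.0  # assumed target frame rate
```

Measured numbers depend heavily on hardware and input resolution, so a check like this is best run on the target device.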

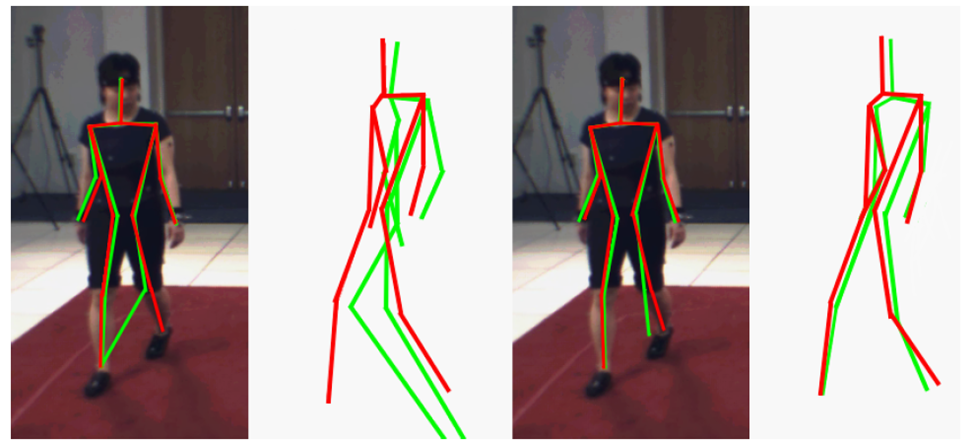

Human Pose Estimation in Saiwa

Human pose estimation refers to detecting the key point locations that describe the overall shape (skeleton) of a person. At Saiwa, we provide human pose estimation that jointly detects body, hand, facial, and foot key points in a single image. Two popular pose estimators are offered: OpenPose and MediaPipe. OpenPose is a multi-person, bottom-up method, while MediaPipe is top-down and, in its standard version, handles a single person. Each method extracts a different set of key points; the details are in the corresponding white paper. Human pose estimation has wide applications, such as motion analysis and action recognition. We have already built a simple yet useful service on top of pose estimation, namely “Corrective Exercise”. This service is also available as a free public demo in your user panel under the Advanced Services category.

Conclusion

Pose estimation has become central to how machines perceive and interact with the physical world. It has found applications in robotics, augmented reality, human-computer interaction, and sports analysis, among others. Despite the challenges posed by occlusions, scale variations, viewpoint changes, and complex object shapes, ongoing research and advances in deep learning continue to improve its accuracy. As the field evolves, further applications are likely to follow.
